Underwater sensor network positioning

Papers and Code

PiDR: Physics-Informed Inertial Dead Reckoning for Autonomous Platforms

Jan 06, 2026

A fundamental requirement for full autonomy is the ability to sustain accurate navigation in the absence of external data, such as GNSS signals or visual information. In these challenging environments, the platform must rely exclusively on inertial sensors, leading to pure inertial navigation. However, the inherent noise and other error terms of the inertial sensors in such real-world scenarios will cause the navigation solution to drift over time. Although conventional deep-learning models have emerged as a possible approach to inertial navigation, they are inherently black-box in nature. Furthermore, they struggle to learn effectively with limited supervised sensor data and often fail to preserve physical principles. To address these limitations, we propose PiDR, a physics-informed inertial dead-reckoning framework for autonomous platforms in situations of pure inertial navigation. PiDR offers transparency by explicitly integrating inertial navigation principles into the network training process through the physics-informed residual component. PiDR plays a crucial role in mitigating abrupt trajectory deviations even under limited or sparse supervision. We evaluated PiDR on real-world datasets collected by a mobile robot and an autonomous underwater vehicle. We obtained more than 29% positioning improvement in both datasets, demonstrating the ability of PiDR to generalize across different platforms operating in various environments and dynamics. Thus, PiDR offers a robust, lightweight, yet effective architecture and can be deployed on resource-constrained platforms, enabling real-time pure inertial navigation in adverse scenarios.
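To make the drift problem concrete, the following is a minimal sketch (not part of PiDR) of how a small constant accelerometer bias grows into a large position error under plain double integration. The sample rate, duration, and bias value are assumptions chosen only for illustration.

```python
import numpy as np

def integrate_position(accel, dt):
    """Double-integrate 1-D acceleration samples into position (Euler steps)."""
    velocity = np.cumsum(accel) * dt
    position = np.cumsum(velocity) * dt
    return position

dt = 0.01          # assumed 100 Hz IMU sample period, in seconds
n_samples = 6000   # 60 seconds of data
bias = 0.05        # hypothetical accelerometer bias, in m/s^2

# The platform is stationary, so the true acceleration is zero
# and every measured sample is pure bias.
measured_accel = np.full(n_samples, bias)
drift = integrate_position(measured_accel, dt)

# Analytic reference: a constant bias b gives an error of 0.5 * b * t^2.
analytic = 0.5 * bias * (n_samples * dt) ** 2
print(f"numerical drift: {drift[-1]:.2f} m, analytic: {analytic:.2f} m")
```

After one minute the error is roughly 90 m, which illustrates why pure inertial navigation needs some form of correction or learned constraint.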

ResAlignNet: A Data-Driven Approach for INS/DVL Alignment

Nov 17, 2025

Autonomous underwater vehicles rely on precise navigation systems that combine the inertial navigation system and the Doppler velocity log for successful missions in challenging environments where satellite navigation is unavailable. The effectiveness of this integration critically depends on accurate alignment between the sensor reference frames. Standard model-based alignment methods between these sensor systems suffer from lengthy convergence times, dependence on prescribed motion patterns, and reliance on external aiding sensors, significantly limiting operational flexibility. To address these limitations, this paper presents ResAlignNet, a data-driven approach using the 1D ResNet-18 architecture that transforms the alignment problem into deep neural network optimization, operating as an in-situ solution that requires only onboard sensors without external positioning aids or complex vehicle maneuvers, while achieving rapid convergence in seconds. Additionally, the approach demonstrates Sim2Real transfer, enabling training on synthetic data and deployment on operational sensor measurements. Experimental validation using the Snapir autonomous underwater vehicle demonstrates that ResAlignNet achieves alignment accuracy within 0.8° using only 25 seconds of data collection, representing a 65% reduction in convergence time compared to standard velocity-based methods. The trajectory-independent solution eliminates motion pattern requirements and enables immediate vehicle deployment without lengthy pre-mission procedures, advancing underwater navigation capabilities through robust sensor-agnostic alignment that scales across different operational scenarios and sensor specifications.
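For context on what "alignment" means here, the sketch below shows a classical velocity-based baseline, not ResAlignNet itself: it estimates the rotation between the DVL and INS frames from paired velocity vectors with an SVD-based least-squares fit (the Kabsch solution to Wahba's problem). The function names, the synthetic 5° misalignment, and the noise level are assumptions made for illustration.

```python
import numpy as np

def estimate_alignment(v_dvl, v_ins):
    """Least-squares rotation R such that v_ins[i] ~= R @ v_dvl[i].

    v_dvl, v_ins: arrays of shape (N, 3) holding paired velocity vectors.
    """
    H = v_dvl.T @ v_ins                      # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))   # guard against reflections
    D = np.diag([1.0, 1.0, d])
    return Vt.T @ D @ U.T

def rotation_z(angle_deg):
    """Rotation about the z axis by angle_deg degrees."""
    a = np.radians(angle_deg)
    return np.array([[np.cos(a), -np.sin(a), 0.0],
                     [np.sin(a),  np.cos(a), 0.0],
                     [0.0,        0.0,       1.0]])

# Synthetic test: a hypothetical 5 degree yaw misalignment plus sensor noise.
rng = np.random.default_rng(0)
R_true = rotation_z(5.0)
v_dvl = rng.normal(size=(200, 3))            # varied motion excites all axes
v_ins = v_dvl @ R_true.T + 0.01 * rng.normal(size=(200, 3))

R_est = estimate_alignment(v_dvl, v_ins)
cos_err = (np.trace(R_est.T @ R_true) - 1.0) / 2.0
angle_error_deg = np.degrees(np.arccos(np.clip(cos_err, -1.0, 1.0)))
```

The synthetic velocities vary in every direction, which is exactly the kind of motion richness that model-based methods depend on and that the abstract reports the learned approach avoids.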

A Comprehensive Survey on Underwater Acoustic Target Positioning and Tracking: Progress, Challenges, and Perspectives

Jun 17, 2025

Underwater target tracking technology plays a pivotal role in marine resource exploration, environmental monitoring, and national defense security. Given that acoustic waves represent an effective medium for long-distance transmission in aquatic environments, underwater acoustic target tracking has become a prominent research area of underwater communications and networking. Existing literature reviews often offer a narrow perspective or inadequately address the paradigm shifts driven by emerging technologies like deep learning and reinforcement learning. To address these gaps, this work presents a systematic survey of this field and introduces an innovative multidimensional taxonomy framework based on target scale, sensor perception modes, and sensor collaboration patterns. Within this framework, we comprehensively survey the literature (more than 180 publications) over the period 2016-2025, spanning from the theoretical foundations to diverse algorithmic approaches in underwater acoustic target tracking. Particularly, we emphasize the transformative potential and recent advancements of machine learning techniques, including deep learning and reinforcement learning, in enhancing the performance and adaptability of underwater tracking systems. Finally, this survey concludes by identifying key challenges in the field and proposing future avenues based on emerging technologies such as federated learning, blockchain, embodied intelligence, and large models.

Aucamp: An Underwater Camera-Based Multi-Robot Platform with Low-Cost, Distributed, and Robust Localization

Jun 11, 2025

This paper introduces an underwater multi-robot platform, named Aucamp, characterized by cost-effective monocular-camera-based sensing, a distributed protocol, and robust orientation control for localization. We utilize the clarity feature to measure the distance, present the monocular imaging model, and estimate the position of the target object. We achieve global positioning in our platform by designing a distributed update protocol. The distributed algorithm enables the perception process to simultaneously cover a broader range, and greatly improves the accuracy and robustness of the positioning. Moreover, the explicit dynamics model of the robot in our platform is obtained, based on which we propose a robust orientation control framework. The control system ensures that the platform maintains a balanced posture for each robot, thereby ensuring the stability of the localization system. The platform can swiftly recover from a forced unstable state to a stable horizontal posture. Additionally, we conduct extensive experiments and application scenarios to evaluate the performance of our platform. The proposed new platform may provide support for extensive marine exploration by underwater sensor networks.

Learning-Based Leader Localization for Underwater Vehicles With Optical-Acoustic-Pressure Sensor Fusion

Feb 28, 2025

Underwater vehicles have emerged as a critical technology for exploring and monitoring aquatic environments. The deployment of multi-vehicle systems has gained substantial interest due to their capability to perform collaborative tasks with improved efficiency. However, achieving precise localization of a leader underwater vehicle within a multi-vehicle configuration remains a significant challenge, particularly in dynamic and complex underwater conditions. To address this issue, this paper presents a novel tri-modal sensor fusion neural network approach that integrates optical, acoustic, and pressure sensors to localize the leader vehicle. The proposed method leverages the unique strengths of each sensor modality to improve localization accuracy and robustness. Specifically, optical sensors provide high-resolution imaging for precise relative positioning, acoustic sensors enable long-range detection and ranging, and pressure sensors offer environmental context awareness. The fusion of these sensor modalities is implemented using a deep learning architecture designed to extract and combine complementary features from raw sensor data. The effectiveness of the proposed method is validated through a custom-designed testing platform. Extensive data collection and experimental evaluations demonstrate that the tri-modal approach significantly improves the accuracy and robustness of leader localization, outperforming both single-modal and dual-modal methods.

Optoacoustic Signal-Based Underwater Node Localization Technique: Overcoming GPS Limitations without AUV Requirements

Jun 10, 2023

Underwater sensor networks are anticipated to facilitate numerous commercial and military applications. Moreover, precise self-localization in practical underwater scenarios is a crucial challenge in sensor networks because of the complexity of deploying sensor points in specific locations. The Global Positioning System (GPS) is inappropriate for underwater localization because saline water may severely attenuate the signal, limiting penetration capacity to barely a few meters. Hence, acoustic communication is regarded as the most promising alternative to wireless radio wave transmissions for underwater networks. In order to establish an underwater localization model, traditional techniques that essentially include surface gateways or intermediate anchor nodes create logistical challenges and security hazards when the surface access points are deployed. This paper proposes a unique localization method that employs optoacoustic signals to remotely localize underwater wireless sensor networks in order to address these concerns. In our model, the GPS signals are transmitted from the air to the underwater node through a mobile beacon that employs optoacoustic techniques to generate a short-term isotropic acoustic signal source. Underwater nodes with omnidirectional receivers can detect their location in random and realistic environments by comparing signal levels from two equivalent plasmas, also referred to as acoustic source nodes. Finally, the software simulation results in comparison to the proposed theoretical model highlight the feasibility of our approach. In comparison to complicated traditional node localization approaches, our simplified approach is anticipated to achieve better accuracy with only a single off-water controlled node, while avoiding the additional requirement for surface anchor nodes.

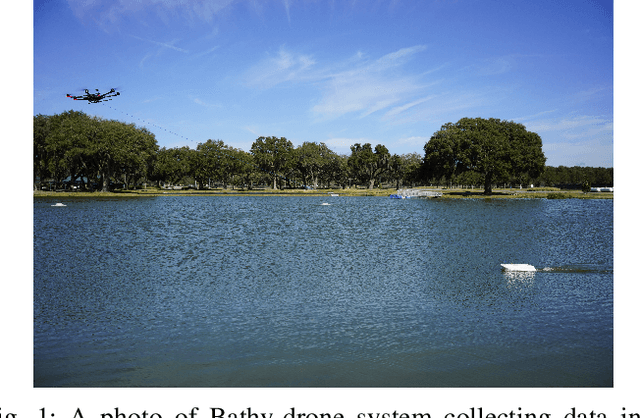

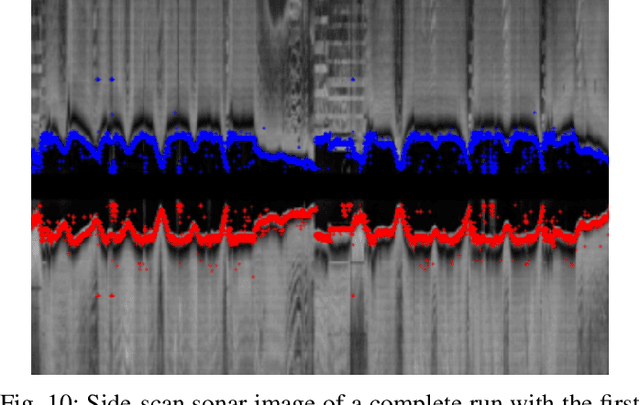

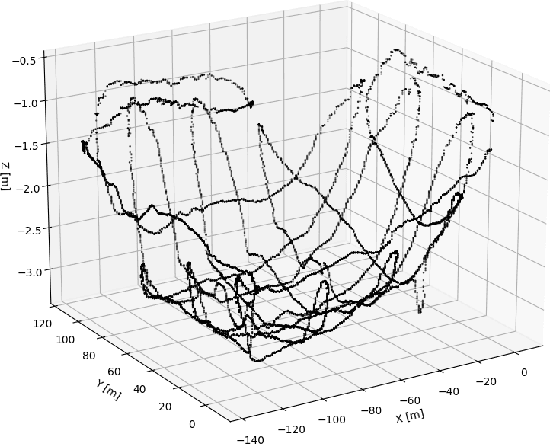

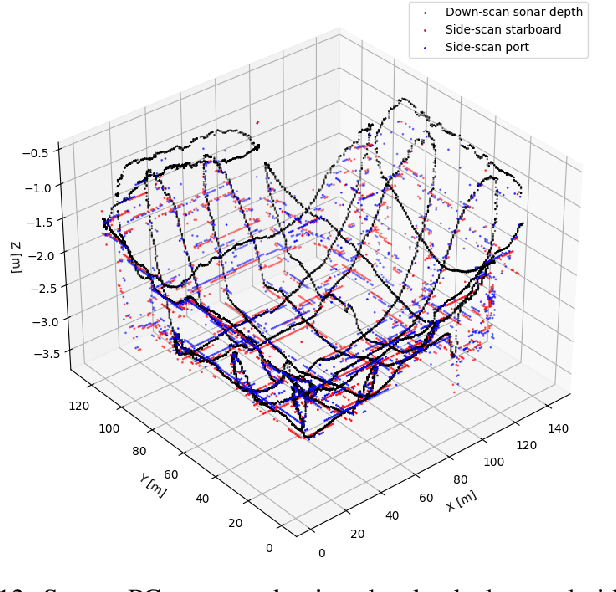

Time and Cost-Efficient Bathymetric Mapping System using Sparse Point Cloud Generation and Automatic Object Detection

Oct 19, 2022

Generating 3D point cloud (PC) data from noisy sonar measurements is a problem that has potential applications for bathymetry mapping, artificial object inspection, mapping of aquatic plants and fauna as well as underwater navigation and localization of vehicles such as submarines. Side-scan sonar sensors are available at low cost, especially in fish-finders, where the transducers are usually mounted to the bottom of a boat and can approach shallower depths than the ones attached to an Uncrewed Underwater Vehicle (UUV) can. However, extracting 3D information from side-scan sonar imagery is a difficult task because of its low signal-to-noise ratio and missing angle and depth information in the imagery. Since most algorithms that generate a 3D point cloud from side-scan sonar imagery use Shape from Shading (SFS) techniques, extracting 3D information is especially difficult when the seafloor is smooth, is slowly changing in depth, or does not have identifiable objects that make acoustic shadows. This paper introduces an efficient algorithm that generates a sparse 3D point cloud from side-scan sonar images. The computation is kept efficient by leveraging the geometry of the first sonar return combined with known positions provided by GPS and down-scan sonar depth measurement at each data point. Additionally, this paper implements another algorithm that uses a Convolutional Neural Network (CNN) with transfer learning to perform object detection on side-scan sonar images, both collected in the field and generated in simulation. The algorithm was tested on both real and synthetic images to show reasonably accurate anomaly detection and classification.

Design of an Optimal Testbed for Tracking of Tagged Marine Megafauna

Apr 08, 2022

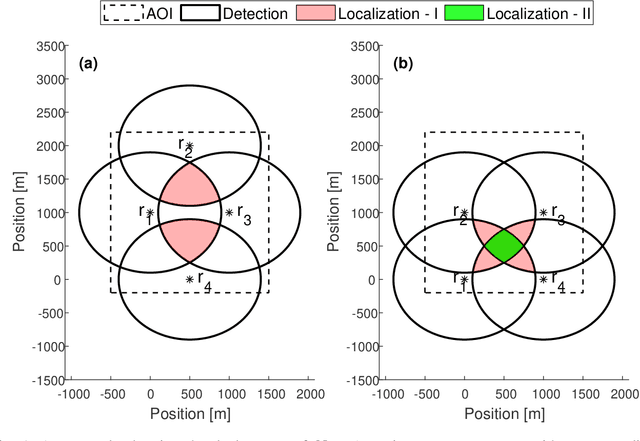

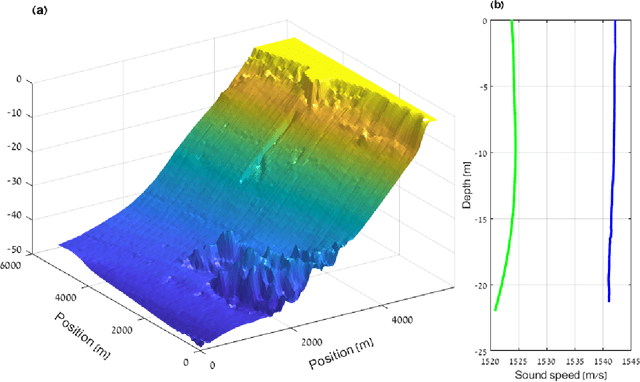

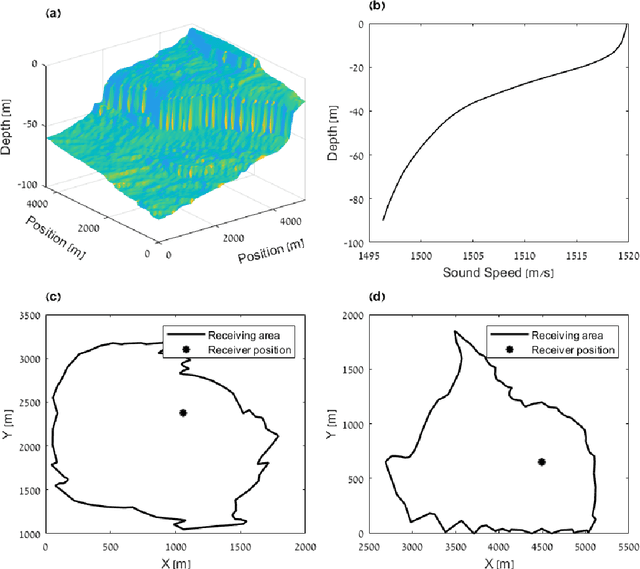

Underwater acoustic technologies are a key component for exploring the behavior of marine megafauna such as sea turtles, sharks, and seals. The animals are marked with acoustic devices (tags) that periodically emit signals encoding the device's ID along with sensor data such as depth, temperature, or the dominant acceleration axis - data that is collected by a network of deployed receivers. In this work, we aim to optimize the locations of receivers for best tracking of acoustically tagged marine megafauna. The outcomes of such tracking allow the evaluation of the animals' motion patterns, their hours of activity, and their social interactions. In particular, we focus on how to determine the receivers' deployment positions to maximize the coverage area in which the tagged animals can be tracked. For example, an overly condensed deployment may not allow accurate tracking, whereas a sparse one may lead to a small coverage area due to too few detections. We formalize the question of where to best deploy the receivers as a non-convex constrained optimization problem that takes into account the local environment and the specifications of the tags, and offer a sub-optimal, low-complexity solution that can be applied to large testbeds. Numerical investigation for three simulated sea environments shows that our proposed method is able to increase the localization coverage area by 30%, and results from a test case experiment demonstrate similar performance in a real sea environment. We share the implementation of our work to help researchers set up their own acoustic observatory.
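The trade-off between dense and sparse deployments can be illustrated with a simple greedy heuristic. This is a hypothetical sketch, not the authors' optimization method: it assumes a flat 2-D area, a fixed circular detection range, and that a point counts as covered once at least `k_required` receivers can detect it. All names and numbers are illustrative.

```python
import numpy as np

def greedy_receiver_placement(candidates, targets, detection_range,
                              n_receivers, k_required=3):
    """Greedily pick receiver sites from `candidates` (M, 2) to cover `targets` (T, 2).

    Returns the chosen candidate indices and the fraction of target points
    detected by at least `k_required` receivers.
    """
    dists = np.linalg.norm(candidates[:, None, :] - targets[None, :, :], axis=2)
    detects = dists <= detection_range           # (M, T) boolean detection map
    counts = np.zeros(len(targets), dtype=int)   # detections per target point
    chosen = []
    for _ in range(n_receivers):
        # Surrogate score: total detections, capped at k_required per point,
        # so extra receivers on already-covered points earn nothing.
        scores = np.minimum(counts[None, :] + detects, k_required).sum(axis=1)
        if chosen:
            scores[chosen] = -1                  # never pick a site twice
        best = int(np.argmax(scores))
        chosen.append(best)
        counts += detects[best]
    coverage = float(np.mean(counts >= k_required))
    return chosen, coverage

# Hypothetical 1 km x 1 km area: candidate sites every 250 m, test points every 50 m.
site_axis = np.arange(0.0, 1001.0, 250.0)
candidates = np.array([(x, y) for x in site_axis for y in site_axis])
point_axis = np.arange(0.0, 1001.0, 50.0)
targets = np.array([(x, y) for x in point_axis for y in point_axis])

chosen, coverage = greedy_receiver_placement(
    candidates, targets, detection_range=400.0, n_receivers=6)
print(f"chosen sites: {chosen}, coverage fraction: {coverage:.2f}")
```

The capped score reflects the intuition in the abstract: clustering receivers wastes detections, while spreading them too thin leaves points with fewer than the required number of detections.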

A Deep Learning Approach To Dead-Reckoning Navigation For Autonomous Underwater Vehicles With Limited Sensor Payloads

Oct 01, 2021

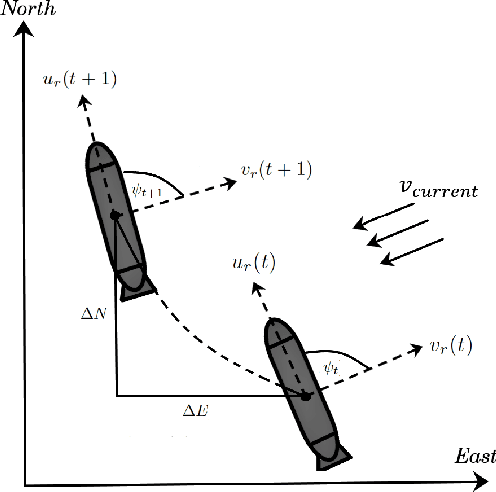

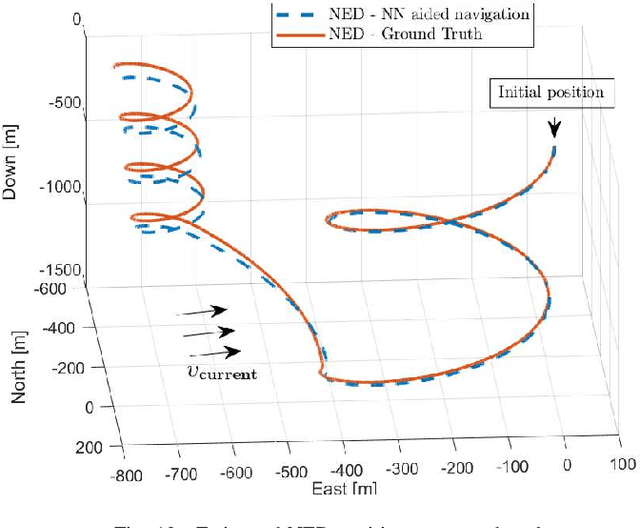

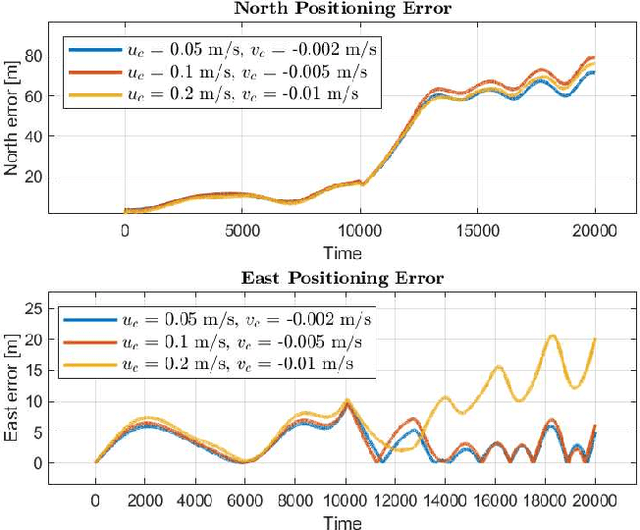

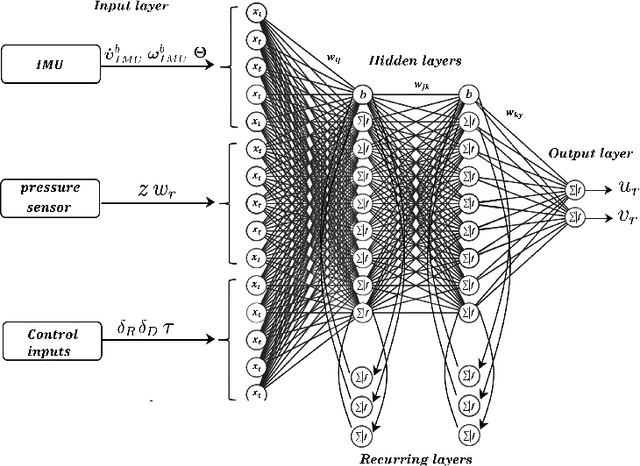

This paper presents a deep learning approach to aid dead-reckoning (DR) navigation using a limited sensor suite. A Recurrent Neural Network (RNN) was developed to predict the relative horizontal velocities of an Autonomous Underwater Vehicle (AUV) using data from an IMU, pressure sensor, and control inputs. The RNN is trained using experimental data, where a Doppler velocity logger (DVL) provided ground truth velocities. The predictions of the relative velocities were implemented in a dead-reckoning algorithm to approximate north and east positions. The studies in this paper were twofold: I) Experimental data from a Long-Range AUV was investigated. Datasets from a series of surveys in Monterey Bay, California (U.S.) were used to train and test the RNN. II) The second study explores datasets generated by a simulated autonomous underwater glider. Environmental variables, e.g., ocean currents, were implemented in the simulation to reflect real ocean conditions. The proposed neural network approach to DR navigation was compared to the on-board navigation system and ground truth simulated positions.
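The dead-reckoning step that turns predicted velocities into north and east positions can be sketched as follows. This is a minimal illustration, not the paper's implementation: it assumes body-frame surge and sway velocities, a heading measured clockwise from north, and simple Euler integration. The function and variable names are hypothetical.

```python
import numpy as np

def dead_reckon(surge, sway, heading_rad, dt, north0=0.0, east0=0.0):
    """Integrate body-frame horizontal velocities into north/east positions.

    surge: forward velocity samples (m/s); sway: starboard velocity samples (m/s);
    heading_rad: heading in radians, clockwise from north; dt: sample period (s).
    """
    # Rotate body-frame velocities into the north-east frame.
    v_north = surge * np.cos(heading_rad) - sway * np.sin(heading_rad)
    v_east = surge * np.sin(heading_rad) + sway * np.cos(heading_rad)
    # Accumulate displacement with Euler steps.
    north = north0 + np.cumsum(v_north) * dt
    east = east0 + np.cumsum(v_east) * dt
    return north, east

# Example: 1 m/s straight ahead on a due-east heading for 100 seconds.
n = 100
surge = np.ones(n)
sway = np.zeros(n)
heading = np.full(n, np.pi / 2)
north, east = dead_reckon(surge, sway, heading, dt=1.0)
print(f"final position: north={north[-1]:.1f} m, east={east[-1]:.1f} m")
```

In a learned pipeline, `surge` and `sway` would come from the network's velocity predictions rather than from a DVL. Because the velocities are relative, any unmodelled ocean current adds directly to the position error.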

Cooperative Localization and Multitarget Tracking in Agent Networks with the Sum-Product Algorithm

Aug 05, 2021

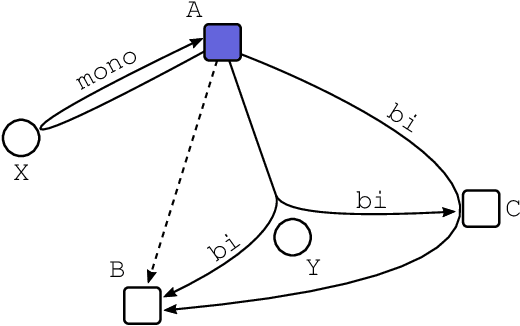

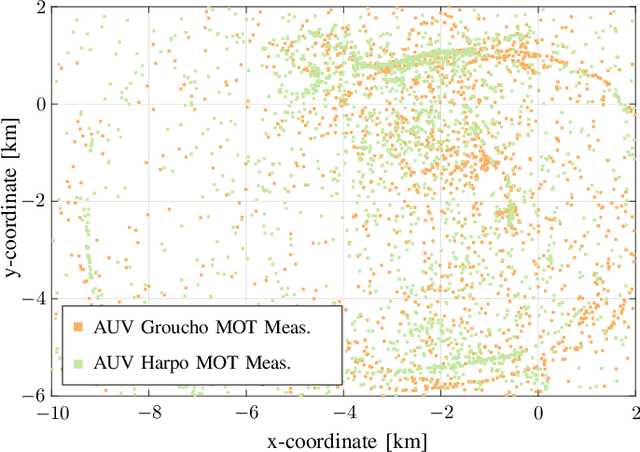

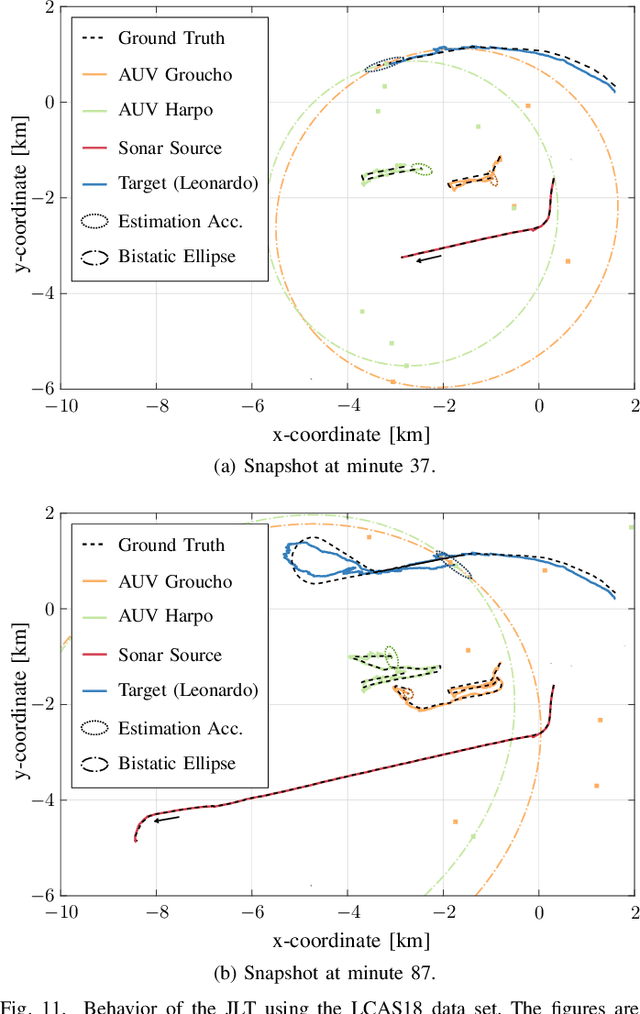

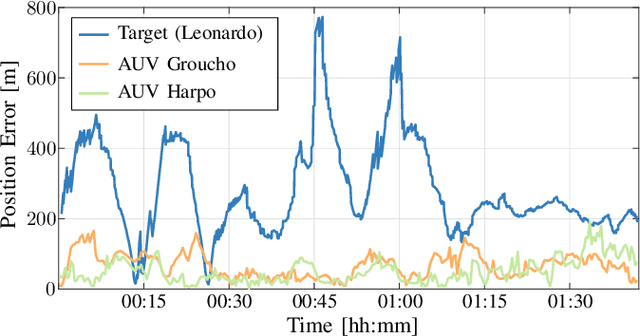

This paper addresses the problem of multitarget tracking using a network of sensing agents with unknown positions. Agents have to both localize themselves in the sensor network and, at the same time, perform multitarget tracking in the presence of clutter and missed target detections. These two problems are jointly resolved in a holistic approach where graph theory is used to describe the statistical relationships among agent states, target states, and observations. A scalable message passing scheme, based on the sum-product algorithm, enables efficient approximation of the marginal posterior distributions of both agent and target states. The proposed solution is general enough to accommodate a full multistatic network configuration, with multiple transmitters and receivers. Numerical simulations show superior performance of the proposed joint approach with respect to the case in which cooperative self-localization and multitarget tracking are performed separately, as the former manages to extract valuable information from targets. Lastly, data acquired in 2018 by the NATO Science and Technology Organization (STO) Centre for Maritime Research and Experimentation (CMRE) through a network of autonomous underwater vehicles demonstrate the effectiveness of the approach in practical applications.
